RL for LLMs: A Collection of High-Quality Articles

Algorithms

PPO

Proximal Policy Optimization Algorithms

Date: 2017.08.28

Understanding PPO (Proximal Policy Optimization) from Scratch: From Formulas to Code

Illustrated LLM RLHF Series: PPO Principles and Source Code Walkthrough That Anyone Can Follow

RLOO

Back to Basics: Revisiting REINFORCE Style Optimization for Learning from Human Feedback in LLMs

Date: 2024.02.22

Large Models | Neither PPO nor DPO Is the Ideal Form of RLHF

A Comparison of Four RLHF Algorithms: PPO, GRPO, RLOO, REINFORCE++

GRPO

DeepSeekMath: Pushing the Limits of Mathematical Reasoning in Open Language Models

Date: 2024.04.27

An RL Beginner's Take on GRPO (Part 1): The Winning Secret of DeepSeek R1 and Qwen QwQ

Why Does GRPO Training Diverge So Easily, with the Reward Suddenly Dropping Midway Through?

ReMax

ReMax: A Simple, Effective, and Efficient Reinforcement Learning Method for Aligning Large Language Models

Date: 2024.05.27

ReMax: An Efficient RLHF Algorithm That Can Replace PPO (ICML 2024)

DAPO

DAPO: An Open-Source LLM Reinforcement Learning System at Scale

Date: 2025.05.20

CISPO

MiniMax-M1: Scaling Test-Time Compute Efficiently with Lightning Attention

Date: 2025.06.16

GSPO

Group Sequence Policy Optimization

Date: 2025.07.28

REINFORCE++

REINFORCE++: An Efficient RLHF Algorithm with Robustness to Both Prompt and Reward Models

Date: 2025.08.03

RLHF Alignment with the REINFORCE++ Algorithm: More Stable than GRPO, Faster than PPO

Topics

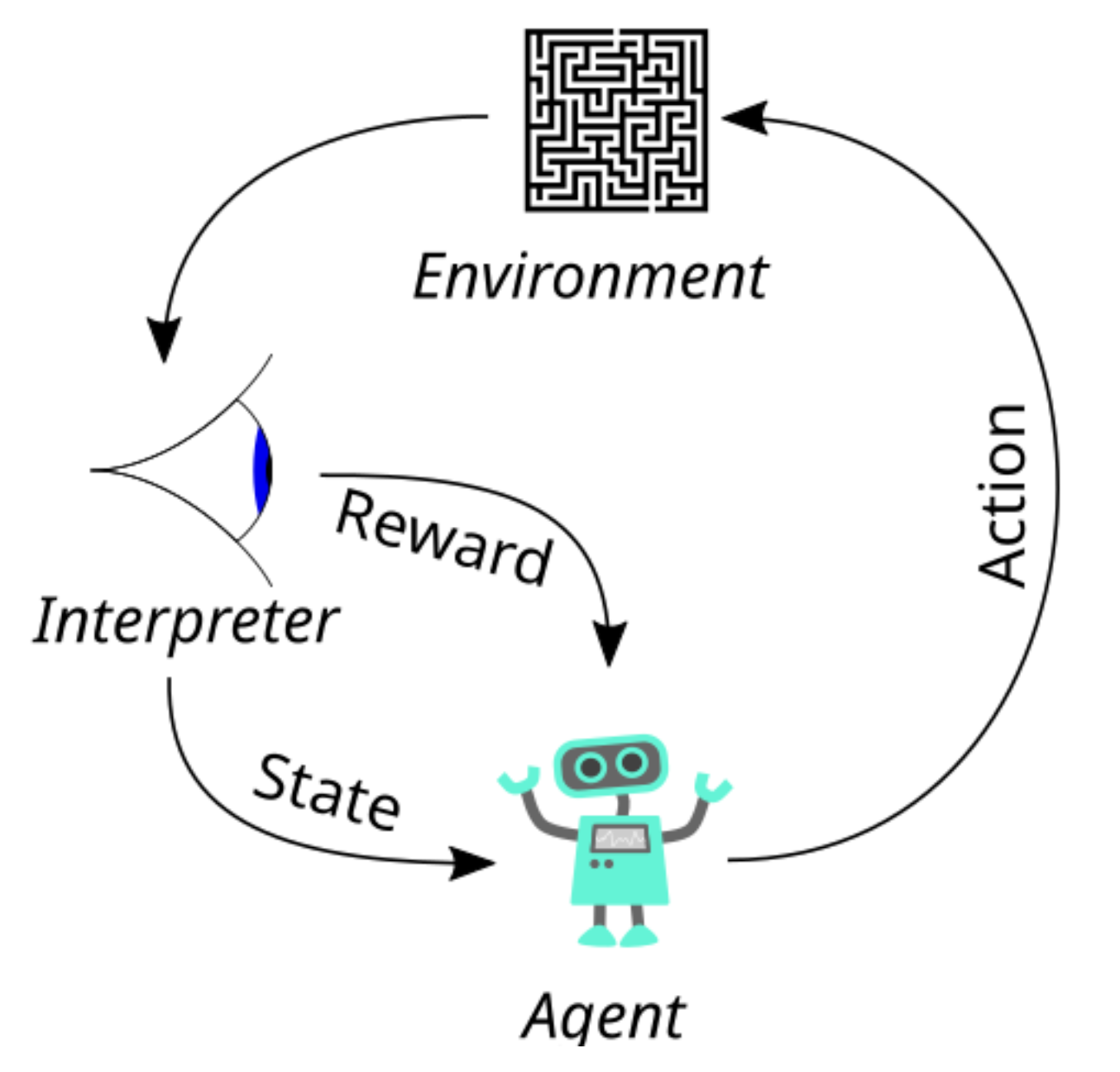

Agentic RL

Survey: LLM-Based Agentic Reinforcement Learning (Agentic RL)

Training and Inference Frameworks

What Kind of RL Framework Do We Need in the Era of RL Scaling?

A Brief Analysis of CUDA Graph Based on torch-memory-savor

Training-Inference Mismatch

Your Efficient RL Framework Secretly Brings You Off-Policy RL Training

Small Leak Can Sink a Great Ship—Boost RL Training on MoE with 𝑰𝒄𝒆𝑷𝒐𝒑!

When Speed Kills Stability: Demystifying RL Collapse from the Training-Inference Mismatch

What Problem in Large Models Does R3 (Rollout Routing Replay), Proposed in Xiaomi's Paper, Solve?

Training and Inference Optimization

Parameter Updates

NeMo-RL: Journey of Optimizing Weight Transfer in Large MoE Models by 10x

Tokenization & Retokenization

1. Analyzing the Complexity of Agentic Multi-Turn Training from the Tokenizer's Perspective

2. No More Retokenization Drift: Returning Token IDs via the OpenAI Compatible API Matters in Agent RL

Concepts

Frameworks

TRL

OpenRLHF

OpenRLHF Source Code Walkthrough: 2. Experience Data Sampling in PPO Training

veRL

slime

AReaL

NVIDIA-NeMo/RL

ROLL

EasyR1

Flash-RL

Surveys

A Survey of Reinforcement Learning for Large Reasoning Models

The Landscape of Agentic Reinforcement Learning for LLMs: A Survey